1. Introduction

To meet users' real-time requirements, we officially support upgrading Linux to RTLinux based on the kernel in the SDK source code. Our RTLinux support comes in two versions, preempt and Xenomai. The tests below use the preempt version.

1.1. RTLinux system firmware support

The RK3562, RK356X, and RK3588 chip platforms are supported. Real-time firmware can be downloaded from the firmware download page of the corresponding board model. If you need the source code, please contact our business team.

1.2. Testing real-time performance

Testing real-time performance requires cyclictest, which is part of the rt-tests package and can be installed with apt.

apt update

apt install rt-tests
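Before measuring, it can help to confirm that the running kernel is actually the real-time one. The sketch below is not part of the official procedure. It assumes that preempt real-time kernels report `PREEMPT RT` or `PREEMPT_RT` in the output of `uname -v`, and `kernel_is_rt` is a hypothetical helper name.

```shell
#!/bin/sh
# kernel_is_rt: hypothetical helper. Succeeds when the given kernel version
# string (as printed by `uname -v`) advertises the PREEMPT RT patch set.
# Assumption: RT kernels report "PREEMPT RT" or "PREEMPT_RT" in that string.
kernel_is_rt() {
    case "$1" in
        *"PREEMPT RT"*|*PREEMPT_RT*) return 0 ;;
        *) return 1 ;;
    esac
}

# Usage:
#   if kernel_is_rt "$(uname -v)"; then echo "RT kernel"; else echo "not RT"; fi
#   command -v cyclictest   # prints the path if rt-tests is installed
```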

1.2.1. Testing the real-time performance of RTLinux

Use stress or stress-ng to simulate a common load scenario that keeps the CPU fully loaded.

# Run CPU, I/O, and memory load threads based on the number of cores

stress --cpu 4 --io 4 --vm 4 --vm-bytes 256M --timeout 259200s &
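The worker counts in the command above match a four-core chip. A minimal sketch for deriving them from the core count follows. `build_stress_cmd` is a hypothetical helper, and it only prints the command so that it can be reviewed before running.

```shell
#!/bin/sh
# build_stress_cmd: hypothetical helper that prints a stress command line
# with one cpu, io and vm worker per core.
#   $1 = number of cores, $2 = duration in seconds
build_stress_cmd() {
    cores=$1
    seconds=$2
    echo "stress --cpu $cores --io $cores --vm $cores --vm-bytes 256M --timeout ${seconds}s"
}

# Usage (3 days = 3 * 24 * 3600 = 259200 seconds):
#   build_stress_cmd "$(nproc)" 259200
#   eval "$(build_stress_cmd "$(nproc)" 259200)" &
```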

Run the following command to measure the real-time response latency of each core. The test runs for 3 days, and the results are written to the file named output once it completes.

cyclictest -m -S -p99 -i1000 -h800 -D3d -q > output
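In this command, `-m` locks memory, `-S` runs one measurement thread per core, `-p99` sets the thread priority, `-i1000` sets a 1000 us interval, `-h800` collects a histogram up to 800 us, `-D3d` runs for 3 days, and `-q` prints only the final summary. The sketch below pulls the per-core minimum, average, and maximum latency out of such a file. It assumes the summary lines have the form `# Min Latencies: ...`, `# Avg Latencies: ...`, and `# Max Latencies: ...`, with one value per core in microseconds. `summarize_cyclictest` is a hypothetical helper name.

```shell
#!/bin/sh
# summarize_cyclictest: hypothetical helper.
#   $1 = file written by `cyclictest ... -h800 -q > output`
# Prints one line per core with its min/avg/max latency in microseconds.
summarize_cyclictest() {
    awk '
        # Summary lines look like "# Max Latencies: 00135 00112 ..."
        /^# (Min|Avg|Max) Latencies:/ {
            label = $2
            # Values start at field 4; "+ 0" strips the zero padding
            for (i = 4; i <= NF; i++) val[label, i - 4] = $i + 0
            if (NF - 3 > ncpu) ncpu = NF - 3
        }
        END {
            for (c = 0; c < ncpu; c++)
                printf "CPU%d min=%d avg=%d max=%d\n", c, val["Min", c], val["Avg", c], val["Max", c]
        }
    ' "$1"
}

# Usage:
#   summarize_cyclictest output
```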

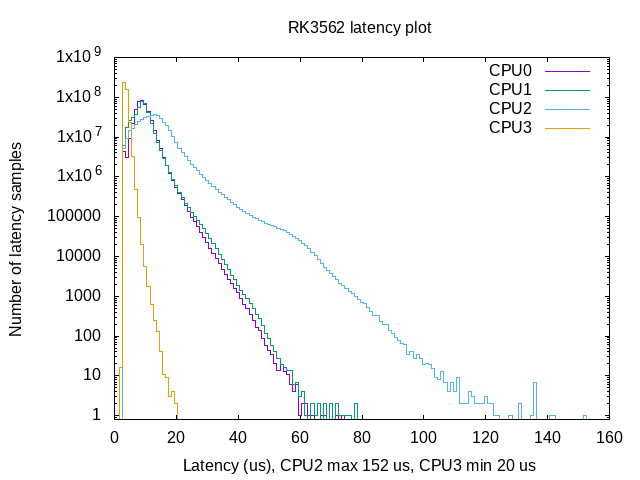

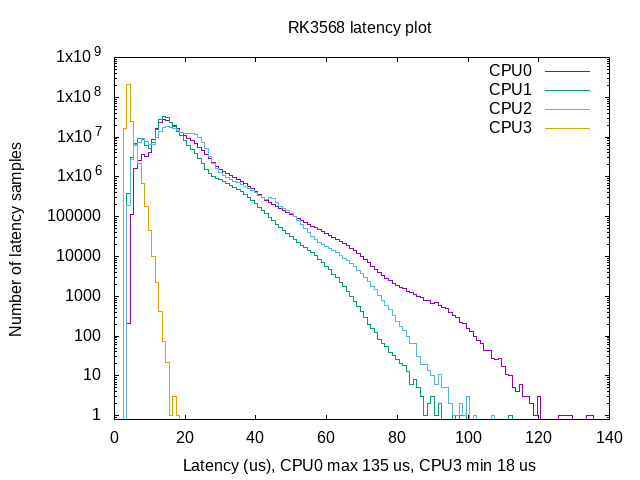

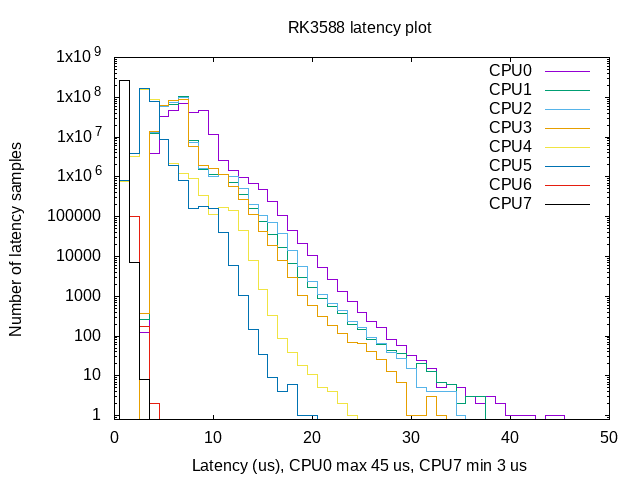

We ran the above test for 3 days on the RK3568, RK3562, and RK3588. To observe the best real-time performance of each chip, CPU3 was removed from the kernel's SMP balancing and scheduling on the RK3568 and RK3562, and CPU6 and CPU7 were removed on the RK3588.
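This section does not state how the cores were removed from SMP balancing and scheduling. One common mechanism is the `isolcpus=` kernel boot parameter. Assuming that is what was used, the sketch below extracts its value from a kernel command line. `isolcpus_from_cmdline` is a hypothetical helper.

```shell
#!/bin/sh
# isolcpus_from_cmdline: hypothetical helper. Prints the value of the
# isolcpus= parameter found in the given kernel command line, or nothing
# if the parameter is absent.
# Assumption: the cores were isolated with isolcpus=, which the text
# above does not confirm.
isolcpus_from_cmdline() {
    for arg in $1; do
        case "$arg" in
            isolcpus=*) echo "${arg#isolcpus=}" ;;
        esac
    done
}

# Usage:
#   isolcpus_from_cmdline "$(cat /proc/cmdline)"
#   cat /sys/devices/system/cpu/isolated   # the kernel's own list of isolated CPUs
```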

For easier reading, the test results for the RK3568, RK3562, and RK3588 are presented as the histograms and tables in this section.

On the RK3562, the largest ‘Max Latencies’ value is 152 us on CPU2, and the smallest is 20 us on the isolated CPU3 (the core excluded from the kernel's SMP balancing and scheduling). On the RK3568, the largest is 135 us on CPU0 and the smallest is 18 us on the isolated CPU3. On the RK3588, the largest is 45 us on CPU0 and the smallest is 3 us on the isolated CPU7.

On every chip platform the isolated core shows the best real-time performance, so bind real-time tasks to the isolated core for the best results.

| RK3562 latency (us) \ Core | CPU0 | CPU1 | CPU2 | CPU3 |
|---|---|---|---|---|
| Total (samples) | 420738794 | 420738789 | 420738773 | 420738757 |
| Min Latencies | 3 | 3 | 3 | 2 |
| Avg Latencies | 9 | 8 | 12 | 3 |
| Max Latencies | 74 | 78 | 152 | 20 |

| RK3568 latency (us) \ Core | CPU0 | CPU1 | CPU2 | CPU3 |
|---|---|---|---|---|
| Total (samples) | 259200000 | 259199935 | 259199824 | 259199769 |
| Min Latencies | 4 | 3 | 3 | 3 |
| Avg Latencies | 17 | 15 | 17 | 4 |
| Max Latencies | 135 | 112 | 107 | 18 |

| RK3588 latency (us) \ Core | CPU0 | CPU1 | CPU2 | CPU3 | CPU4 | CPU5 | CPU6 | CPU7 |
|---|---|---|---|---|---|---|---|---|
| Total (samples) | 259200000 | 259199978 | 259199943 | 259199926 | 259199914 | 259199930 | 259199871 | 259199898 |
| Min Latencies | 3 | 3 | 3 | 3 | 1 | 1 | 1 | 1 |
| Avg Latencies | 7 | 6 | 6 | 6 | 3 | 3 | 1 | 1 |
| Max Latencies | 45 | 37 | 35 | 33 | 24 | 20 | 4 | 3 |

1.2.1.1. Other stress scenarios

Latency results differ between scenarios. To get as close as possible to your production environment, other loads can be applied at the same time, for example:

Generating network load:

# Use iperf to test upstream and downstream simultaneously, keeping both transmit and receive on the network card at full load.

iperf -c 192.168.1.220 -p 8001 -f m -i100 -d -t 800000

Generating GPU load:

# Run indefinitely, looping from the last benchmark back to the first.

glmark2-es2-wayland --run-forever

1.2.2. Cyclictest standard test

The threads option (-t) specifies the number of measurement threads that Cyclictest uses when measuring latency. Running exactly one measurement thread per CPU is the standard test scenario. The CPU on which each thread must run can be specified with the affinity option (-a).

These options are critical to minimize the impact of running Cyclictest on the observed system. When using Cyclictest, it is important to ensure that only one measurement thread is executing at any given time. If the expected execution times of two or more Cyclictest threads overlap, then Cyclictest’s measurement will be affected by the latency caused by its own measurement thread. The best way to ensure that only one measurement thread executes at a given time is to execute only one measurement thread on a given CPU.

For example, if you want to profile the latency of three specific CPUs, specify that those CPUs should be used (with the -a option), and specify that three measurement threads should be used (with the -t option). In this case, to minimize Cyclictest overhead, make sure that the main Cyclictest thread collecting metrics data is not running on one of the three isolated CPUs. The affinity of the main thread can be set using the taskset program, as described below.

1.2.2.1. Reduce the impact of cyclictest when evaluating latency on an isolated set of CPUs

When measuring latency on a subset of CPUs, make sure the main Cyclictest thread runs on a CPU that is not being evaluated. For example, if a system has two CPUs and latency is being evaluated on CPU 0, the main Cyclictest thread should be pinned to CPU 1. Cyclictest's main thread is not real-time, but if it runs on the CPU being evaluated it can affect latency because of the additional context switches. The taskset command can be used to restrict the main thread to a certain subset of CPUs. For example, to test latency on CPU1 to CPU3:

# CPU1 to CPU3 run the real-time measurement threads and the main thread runs on CPU0 (this requires the cyclictest built for the board; otherwise all three real-time threads run on CPU1 only, and none run on CPU2 or CPU3)

taskset -c 0 ./cyclictest -t3 -p99 -a 1-3
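The thread count passed to `-t` should equal the number of CPUs named in `-a`. A small sketch that derives the count from the CPU list and prints the full command follows. `count_cpus` and `build_cyclictest_cmd` are hypothetical helpers, and `./cyclictest` stands for the board-built binary mentioned above.

```shell
#!/bin/sh
# count_cpus: hypothetical helper. Counts the CPUs in a list such as
# "1-3" or "0,2-3" (the same syntax accepted by taskset -c).
count_cpus() {
    echo "$1" | tr ',' '\n' | awk -F- '
        NF == 1 { n += 1 }              # single CPU, e.g. "0"
        NF == 2 { n += $2 - $1 + 1 }    # range, e.g. "2-3"
        END { print n + 0 }'
}

# build_cyclictest_cmd: hypothetical helper.
#   $1 = CPU for the non-real-time main thread
#   $2 = list of CPUs to measure
build_cyclictest_cmd() {
    echo "taskset -c $1 ./cyclictest -t$(count_cpus "$2") -p99 -a $2"
}

# Usage:
#   build_cyclictest_cmd 0 1-3
```

Printing the command rather than running it keeps the main-thread CPU and the measured CPUs visible side by side, which makes it easier to spot an accidental overlap.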

The taskset program can also be used to ensure that other programs running on the system do not affect latency on the isolated CPU. For example, to start the program top to see threads pinned to CPU 0, use the following command:

taskset --cpu-list 0 top -H -p PID

# After top opens, press f, move the cursor to the P option, press Space to select it, and press q to exit. The P column then shows which CPU each real-time thread is running on.
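As a non-interactive alternative to top, the CPU each thread last ran on can be read from `/proc`. This is a sketch under the assumption of a standard Linux `/proc` layout, in which field 39 of `/proc/PID/task/TID/stat` is the processor number and field 40 is the real-time priority, as described in proc(5). `thread_cpus` is a hypothetical helper.

```shell
#!/bin/sh
# thread_cpus: hypothetical helper. For every thread of process $1, prints
# the thread ID, the CPU it last ran on, and its real-time priority.
thread_cpus() {
    for task in /proc/"$1"/task/*; do
        [ -r "$task/stat" ] || continue
        # Strip "pid (comm) " first, because comm may contain spaces.
        # The remainder starts at field 3, so fields 39 and 40 become $37 and $38.
        sed 's/^.*) //' "$task/stat" |
            awk -v tid="${task##*/}" '{ printf "tid=%s cpu=%s rtprio=%s\n", tid, $37, $38 }'
    done
}

# Usage:
#   thread_cpus "$(pidof cyclictest)"
```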

1.3. Strategies for improving real-time performance

1.3.1. Suppress console messages and disable memory overcommitment

# Boot the kernel with the quiet parameter, or suppress console messages after startup as follows:

echo 1 > /proc/sys/kernel/printk

# Disable memory overcommit to avoid latency caused by the Out-of-Memory Killer

echo 2 > /proc/sys/vm/overcommit_memory
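To confirm that both settings took effect, the current values can be read back and compared with the ones written above. The following is a sketch. `check_rt_tuning` is a hypothetical helper, and it relies on the first field of `/proc/sys/kernel/printk` being the console log level.

```shell
#!/bin/sh
# check_rt_tuning: hypothetical helper.
#   $1 = contents of /proc/sys/kernel/printk
#   $2 = contents of /proc/sys/vm/overcommit_memory
# Returns 0 only if both match the values written above.
check_rt_tuning() {
    console_level=${1%%[!0-9]*}   # keep only the first field
    status=0
    if [ "$console_level" = "1" ]; then
        echo "console log level: $console_level (quiet)"
    else
        echo "console log level: $console_level (expected 1)"
        status=1
    fi
    if [ "$2" = "2" ]; then
        echo "overcommit_memory: $2 (overcommit disabled)"
    else
        echo "overcommit_memory: $2 (expected 2)"
        status=1
    fi
    return $status
}

# Usage:
#   check_rt_tuning "$(cat /proc/sys/kernel/printk)" "$(cat /proc/sys/vm/overcommit_memory)"
```

Values written with echo do not survive a reboot, so a check like this is most useful in a startup script that runs before the real-time application.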

1.3.2. Do not use a desktop, or use a lightweight window manager

For better real-time performance, we do not recommend a system with a full desktop, because it adds significantly to CPU latency. Use minimal Ubuntu, your own Qt program, or similar instead. The RT firmware for the RK356X does not use a desktop by default. It uses the Weston window manager with the Wayland display protocol.

1.3.2.1. Switch to the X11 environment

If you need an X11 environment, you can switch to X11 manually.

sudo set_display_server x11

reboot

#sudo set_display_server weston    # Switches back to Weston; reboot afterwards.

1.3.2.2. Use the openbox window manager

After switching to X11, the desktop environment is used by default. If you need a lightweight window manager instead, use openbox.

Specify the openbox session in the lightdm configuration (/etc/lightdm/lightdm.conf or a file under /etc/lightdm/lightdm.conf.d/):

cat /etc/lightdm/lightdm.conf.d/20-autologin.conf

[Seat:*]

user-session=openbox

autologin-user=firefly

1.3.2.3. Run only your own X11 program

If you do not use the login manager to start the X display service, you can use xinit to start the Xorg display service manually.

When xinit and startx are executed, they look for ~/.xinitrc to run as a shell script to start the client program.

If ~/.xinitrc does not exist, startx runs the default /etc/X11/xinit/xinitrc (the default xinitrc starts an environment with Twm, xorg-xclock, and Xterm).

First, disable the lightdm service.

systemctl disable lightdm

Then start your own program using startx.

startx chromium

You can also edit the default xinitrc file, which startx runs when no client is specified, so that it launches your own program.

vim /etc/X11/xinit/xinitrc

-------------------------------------------------------------

#!/bin/sh

# /etc/X11/xinit/xinitrc

#

# global xinitrc file, used by all X sessions started by xinit (startx)

# invoke global X session script

#. /etc/X11/Xsession

#chromium --window-size=1920,1080

chromium --start-maximized

1.3.3. Core binding

Events with strict real-time requirements are pinned to a dedicated core, while system tasks and other events with low real-time requirements are concentrated on one core. Specific interrupts, real-time programs, and similar events can each be serviced by a dedicated core.

1.3.3.1. Binding tasks to a core

A real-time application can be bound to a specific core. For example, to bind rt_task to CPU3:

taskset -c 3 rt_task
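To verify the binding, the allowed CPU list of a running process can be read back from `/proc`. This is a minimal sketch. `cpus_allowed` is a hypothetical helper, and `rt_task` is the placeholder program name from the command above.

```shell
#!/bin/sh
# cpus_allowed: hypothetical helper. Prints the list of CPUs that
# process $1 is allowed to run on, as reported by the kernel.
cpus_allowed() {
    awk '/^Cpus_allowed_list:/ { print $2 }' "/proc/$1/status"
}

# Usage:
#   taskset -c 3 ./rt_task &
#   cpus_allowed $!        # prints 3 if the binding took effect
#   taskset -cp 3 PID      # rebinds a process that is already running
```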

1.3.3.2. Binding interrupts to a core

By default, ARM systems route all peripheral interrupts to CPU0. Important interrupts can be bound to other cores after the system starts. For example, to bind the eth0 interrupt to CPU2:

root@firefly:~# cat /proc/interrupts | grep eth0

38: 28600296 0 0 0 GICv3 64 Level eth0

39: 0 0 0 0 GICv3 61 Level eth0

root@firefly:~# cat /proc/irq/38/smp_affinity_list

0-3

root@firefly:~# echo 2 > /proc/irq/38/smp_affinity_list

root@firefly:~# cat /proc/irq/38/smp_affinity_list

2

root@firefly:~# cat /proc/interrupts | grep eth0

38: 29009292 0 52859 0 GICv3 64 Level eth0

39: 0 0 0 0 GICv3 61 Level eth0
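The manual steps in the session above can be scripted. The sketch below looks up every IRQ whose last column in `/proc/interrupts` matches a device name and writes the target CPU to its `smp_affinity_list`. `irqs_for` and `bind_irqs` are hypothetical helpers, and the write requires root.

```shell
#!/bin/sh
# irqs_for: hypothetical helper. Reads /proc/interrupts-style text on stdin
# and prints the IRQ numbers whose last column equals the device name $1.
irqs_for() {
    awk -v dev="$1" '$NF == dev { sub(/:$/, "", $1); print $1 }'
}

# bind_irqs: hypothetical helper. Binds all IRQs of device $1 to CPU $2.
# Must be run as root; the setting is lost on reboot.
bind_irqs() {
    for irq in $(irqs_for "$1" < /proc/interrupts); do
        echo "$2" > "/proc/irq/$irq/smp_affinity_list"
    done
}

# Usage:
#   irqs_for eth0 < /proc/interrupts
#   bind_irqs eth0 2
```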

1.3.4. Use the SMP+AMP scheme

For stricter real-time requirements, an AMP scheme can provide better real-time control.

The RK3568 supports AMP (asymmetric multi-core architecture), which lets you dedicate certain cores to running custom systems.

For example, cores 0 to 2 can run the Linux kernel while core 3 runs RT-Thread. Linux (Kernel-4.19, rt-kernel-4.19), Baremetal (HAL), and RTOS (RT-Thread) can be combined in an AMP configuration.

Inter-core communication can be used to exchange information between the cores.